Wang Neuroscience Lab

Projects

Our lab is looking for research participants. Individuals with autism spectrum disorder are particularly welcome to participate. We conduct both eye tracking and fMRI (functional magnetic resonance imaging) experiments to study visual attention and social perception. If you are interested in participating in our research, please contact Shuo Wang.

Investigating the Neural Circuits for Social Attention in Humans

Selective visual attention is one of the most fundamental cognitive functions in humans. Although many neuroimaging studies have probed the neural mechanisms of visual attention, very few studies have investigated visual attention at the single-neuron level in humans.

Frontal target neurons respond before MTL target neurons. (A, B) Example target neurons from the pre-SMA. (C, D) Two target neurons simultaneously recorded in the pre-SMA and MTL. (E) Cumulative firing rate for target neurons from the pre-SMA (dotted lines; n=31 neurons) and MTL (solid lines; n=27 neurons). Shaded area denotes ± SEM across neurons. Red: fixations on targets. Blue: fixations on distractors. Top bars show clusters of time points with a significant difference. Arrows indicate the first time point of the significant cluster. Magenta: MTL neurons. Black: pre-SMA neurons. (F) Difference in cumulative firing rate (calculated from [E]).

Investigating the Single-Neuron Mechanisms of Face Coding and Social Perception

How the brain encodes different face identities is one of the most fundamental and intriguing questions in neuroscience. There are currently two extreme hypotheses: (1) the exemplar-based model proposes that neurons respond in a remarkably selective and abstract manner to particular persons or objects, whereas (2) the axis-based model (a.k.a. feature-based model) posits that neurons distinguish facial features along specific axes (e.g., shape and skin color) in face space. However, a third, under-explored coding scheme, manifold-based coding, may exist, in which neurons encode the perceptual distance (i.e., similarity) between face exemplars at a macro level, regardless of the individual features that distinguish them at a micro level.

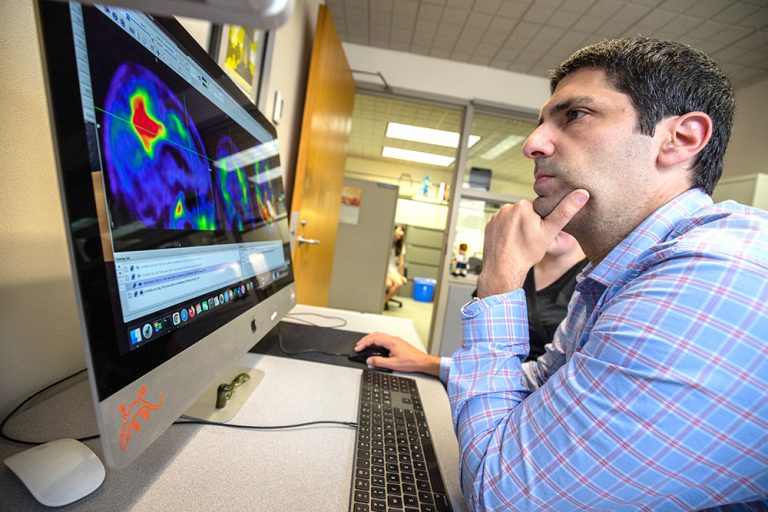

Investigating the Neural Basis for Saliency and Memory Using Natural Scene Stimuli

One key research project in our lab uses complex natural scene images to study saliency, attention, learning, and memory. In our previous studies, we annotated more than 5,000 objects in 700 well-characterized images and recorded eye movements while participants looked at these images (Wang et al., Neuron, 2015).

Model-based eye tracking. We applied a linear support vector machine (SVM) classifier to evaluate the contribution of five general factors to gaze allocation. Feature maps were extracted from the input images and comprised three levels of features (pixel-, object-, and semantic-level) together with the image center and the background. We used random sampling to collect the training data and trained the classifier on the ground-truth fixation data. The classifier outputs are the saliency weights, which show the relative importance of each feature in predicting gaze allocation.
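
The general idea can be illustrated with a minimal sketch. It is not the lab's actual pipeline: the feature maps and fixation mask are synthetic, the function names (`sample_training_data`, `train_linear_svm`, `saliency_weights`), the hyperparameters, and the normalization of the weights are all assumptions made for illustration, and the linear SVM is fitted here with plain subgradient descent on the hinge loss using NumPy only.

```python
import numpy as np

# Hypothetical names for the five factors described in the text.
FEATURES = ["pixel", "object", "semantic", "center", "background"]


def sample_training_data(feature_maps, fixation_mask, n_per_class, rng):
    """Randomly sample fixated (+1) and non-fixated (-1) locations."""
    flat = feature_maps.reshape(len(feature_maps), -1).T  # (pixels, features)
    fixated = np.flatnonzero(fixation_mask.ravel())
    others = np.flatnonzero(~fixation_mask.ravel())
    pos = rng.choice(fixated, size=n_per_class, replace=True)
    neg = rng.choice(others, size=n_per_class, replace=True)
    X = np.vstack([flat[pos], flat[neg]])
    y = np.concatenate([np.ones(n_per_class), -np.ones(n_per_class)])
    return X, y


def train_linear_svm(X, y, reg=1e-3, lr=0.1, epochs=200):
    """Full-batch subgradient descent on the L2-regularized hinge loss."""
    w = np.zeros(X.shape[1])
    b = 0.0
    n = len(y)
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1  # samples that violate the margin
        grad_w = reg * w - (y[active, None] * X[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def saliency_weights(w):
    """Scale weights so their absolute values sum to 1 (assumed convention)."""
    return w / np.abs(w).sum()


# Synthetic demo: one 32x32 "image" with five random feature maps.
rng = np.random.default_rng(0)
feature_maps = rng.uniform(0.0, 1.0, size=(len(FEATURES), 32, 32))

# Assumed ground truth: gaze is driven mostly by the semantic map,
# partly by the object map, plus a little noise.
score = (2.0 * feature_maps[2] + 1.0 * feature_maps[1]
         + rng.normal(0.0, 0.1, size=(32, 32)))
fixation_mask = score > np.quantile(score, 0.8)

X, y = sample_training_data(feature_maps, fixation_mask, 500, rng)
w, b = train_linear_svm(X, y)
weights = saliency_weights(w)

for name, value in zip(FEATURES, weights):
    print(f"{name:>10s}: {value:+.3f}")
```

In this toy setup, the learned weight for the semantic map should dominate because the synthetic fixations were generated mainly from that map. With real data, the feature maps would come from the annotated images and the fixation mask from recorded eye movements.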

Investigating the Behavioral and Neural Underpinnings for Aberrant Social Behavior in Autism

People with autism spectrum disorder (ASD) are characterized by impairments in social and communicative behavior and a restricted range of interests and behaviors (DSM-5, 2013). An overarching hypothesis is that the brains of people with ASD have abnormal representations of what is salient, with consequences for attention that are reflected in eye movements, learning, and behavior. In our prior studies, we have shown that people with autism have atypical face processing and atypical social attention. On the one hand, people with autism show reduced specificity in emotion judgment (Wang and Adolphs, Neuropsychologia, 2017), which might be due to abnormal amygdala responses to eyes vs. mouth (Rutishauser et al., Neuron, 2013). On the other hand, people with autism show atypical bottom-up attention to social stimuli during free viewing of natural scene images (Wang et al., Neuron, 2015), impaired top-down attention to social targets during visual search (Wang et al., Neuropsychologia, 2014), and abnormal photos taken of other people, which reflect a combination of both bottom-up and top-down attention (Wang et al., Curr Biol, 2016). However, the mechanisms underlying these profound social dysfunctions in autism remain largely unknown.